Deep Dive into the Neuro-Symbolic Autonomy Framework

Current Features and System Capabilities

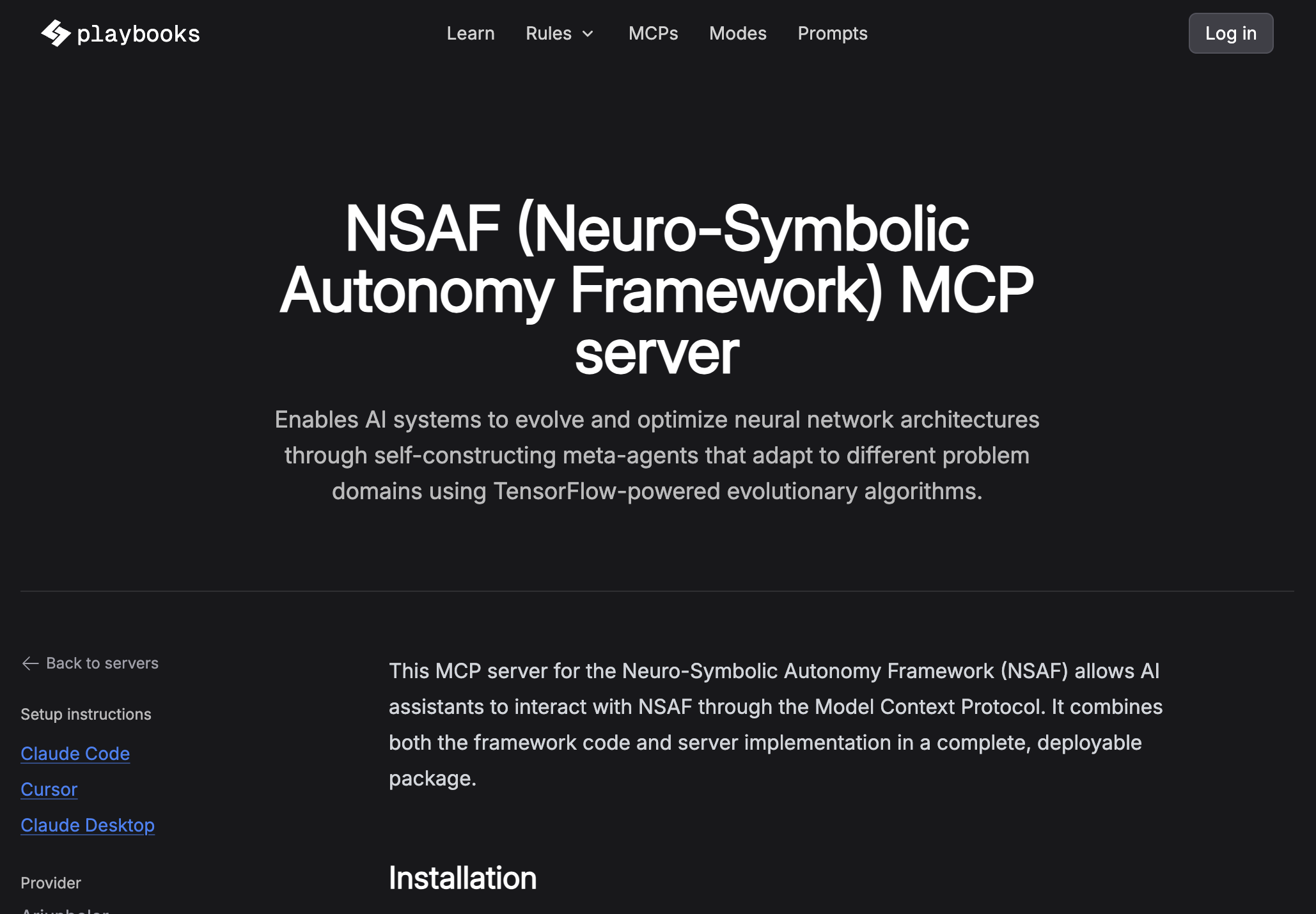

Neuro-Symbolic Autonomy Framework (NSAF): The NSAF MCP Server integrates neural, symbolic, and autonomous learning methods into a unified system for building evolving AI agents. It demonstrates the Self-Constructing Meta-Agents (SCMA) component of NSAF, which allows AI agents to self-design and evolve new agent architectures using Generative Architecture Models. In practice, NSAF’s SCMA creates a population of “meta-agents” (neural network models with various architectures) and optimizes them through simulated evolution.
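The sketch below shows what "optimizes them through simulated evolution" can look like in practice. It is not NSAF's code. The following are all assumptions chosen for illustration:

- the genome encoding (a list of hidden-layer widths),
- the `toy_fitness` stand-in for training and scoring a network,
- truncation selection with elitism,
- every function and constant name.

```python
import random

# Hypothetical genome: one meta-agent architecture encoded as hidden-layer widths.
Genome = list[int]
WIDTHS = [4, 8, 16, 32, 64, 128]
MAX_LAYERS = 4


def random_genome(rng: random.Random) -> Genome:
    """Sample an architecture with 1 to MAX_LAYERS hidden layers."""
    return [rng.choice(WIDTHS) for _ in range(rng.randint(1, MAX_LAYERS))]


def toy_fitness(genome: Genome) -> float:
    """Stand-in for "train the network, return negative validation MSE".

    It simply prefers two hidden layers totalling about 64 units (an arbitrary target).
    """
    return -(abs(len(genome) - 2) + abs(sum(genome) - 64) / 64)


def crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    """One-point crossover: a non-empty prefix of `a` joined to a suffix of `b`."""
    child = a[: rng.randint(1, len(a))] + b[rng.randint(0, len(b)):]
    return child[:MAX_LAYERS]


def mutate(genome: Genome, rate: float, rng: random.Random) -> Genome:
    """With probability `rate` per layer, double or halve its width (clamped)."""
    child = []
    for width in genome:
        if rng.random() < rate:
            width = width * 2 if rng.random() < 0.5 else width // 2
        child.append(max(WIDTHS[0], min(WIDTHS[-1], width)))
    return child


def evolve(population_size: int = 20, generations: int = 15,
           mutation_rate: float = 0.2, crossover_rate: float = 0.7,
           seed: int = 0) -> tuple[Genome, float]:
    """Evolve architectures; population_size must be at least 2."""
    rng = random.Random(seed)
    population = [random_genome(rng) for _ in range(population_size)]
    for _ in range(generations):
        ranked = sorted(population, key=toy_fitness, reverse=True)
        parents = ranked[: max(2, population_size // 2)]  # truncation selection
        next_gen = [ranked[0]]  # elitism: keep the current best unchanged
        while len(next_gen) < population_size:
            a, b = rng.sample(parents, 2)
            child = crossover(a, b, rng) if rng.random() < crossover_rate else list(a)
            next_gen.append(mutate(child, mutation_rate, rng))
        population = next_gen
    best = max(population, key=toy_fitness)
    return best, toy_fitness(best)


best, score = evolve()
print("best architecture:", best, "fitness:", round(score, 3))
```

The best genome is always carried forward, so the best score never decreases from one generation to the next. In a real system, `toy_fitness` would be replaced by training each candidate network and scoring it on held-out data.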

Key Features and Tools: The NSAF MCP Server exposes its capabilities through tools that AI assistants (like Anthropic’s Claude or others supporting MCP) can invoke. Major features include:

- Evolutionary Agent Optimization: Run an evolutionary loop to optimize a population of AI agent architectures with customizable parameters (population size, generations, mutation/crossover rates, etc.). The `run_nsaf_evolution` tool trains and evolves multiple neural-network agents over generations, producing an optimized “best” agent at the end.

- Architecture Comparison: Compare different predefined agent architectures (e.g. simple, medium, complex) using the `compare_nsaf_agents` tool. This helps evaluate how network topology or complexity affects performance.

- Integrated NSAF Framework: The server includes the full NSAF framework code, so it runs out-of-the-box without additional setup. All core NSAF classes (for configuration, meta-agent definition, the evolution algorithm, etc.) are bundled.

- Simplified MCP Protocol: Implements a lightweight Model Context Protocol (MCP) interface without depending on the official MCP SDK. AI assistants communicate with the server via this protocol, allowing two-way integration. The server can be installed as an NPM package and added to an assistant’s toolset configuration (e.g. in Claude’s settings) so that the assistant can launch it and send commands.

- AI Assistant Orchestration: Allows AI assistants to invoke NSAF capabilities from a conversation. For example, an assistant can call `run_nsaf_evolution` with given parameters to delegate heavy learning tasks to NSAF, then receive the results (such as performance metrics or a summary of the best evolved model). This offloads complex model building to the MCP server while the assistant orchestrates the high-level workflow.
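In the MCP specification, messages are JSON-RPC 2.0 requests. A tool invocation uses the `tools/call` method, carrying the tool name and an `arguments` object. NSAF's server implements its own simplified MCP layer, so its message handling may differ from the standard.

The sketch below assumes the standard request shape, sent as newline-delimited JSON over stdio. The `mutation_rate` and `crossover_rate` argument names are hypothetical.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC ids only need to be unique per connection


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Build one newline-delimited JSON-RPC 2.0 "tools/call" request."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request) + "\n"


def read_tool_result(line: str) -> str:
    """Return the text parts of a "tools/call" response; raise on a JSON-RPC error."""
    response = json.loads(line)
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "unknown error"))
    parts = response["result"].get("content", [])
    return "\n".join(p["text"] for p in parts if p.get("type") == "text")


msg = make_tool_call("run_nsaf_evolution", {
    "population_size": 20,
    "generations": 10,
    "mutation_rate": 0.2,   # hypothetical argument name
    "crossover_rate": 0.7,  # hypothetical argument name
})
print(msg, end="")
```

An assistant (or a test harness) would write `msg` to the server's stdin and pass each response line it reads back to `read_tool_result`.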

Meta-Agent Workflows: Under the hood, NSAF uses meta-agents that can design and train neural networks on the fly. Users (or the AI assistant) can customize various aspects of the process:

- Configurable Evolution – Users can set parameters like `population_size`, `generations`, mutation/crossover rates, etc., to control the evolutionary search. The system uses these to breed and evaluate agents over multiple generations.

- Fitness Evaluation – The evolution process uses a fitness function to select the best agents. By default, a simple metric (like negative MSE on a task) can serve as fitness, but the NSAF framework allows custom fitness definitions in code (when using NSAF as a Python library).

- Architecture Templates – NSAF comes with predefined architecture complexities (“simple”, “medium”, “complex”) that vary network depth/layers. Users can also supply a custom architecture structure (e.g. specific layer sizes, activations) when creating a `MetaAgent`.

- Visualization and Persistence – The framework can visualize agents and evolution progress (e.g. saving model diagrams) and save or load agent models. This helps in analyzing the evolved solutions or reusing them later.
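The template and fitness ideas can be made concrete with a small sketch. Several parts of it are hypothetical:

- the layer widths assigned to the simple, medium and complex templates,
- the 8-input, 1-output network shape,
- the size-penalized fitness.

It only illustrates how a custom fitness could trade accuracy (negative MSE) against model size. It does not show how NSAF defines either.

```python
# Hypothetical layer widths for the named templates (not NSAF's actual values).
TEMPLATES = {
    "simple": [16],
    "medium": [32, 16],
    "complex": [64, 32, 16],
}


def param_count(hidden: list[int], n_inputs: int, n_outputs: int) -> int:
    """Weights plus biases of a fully connected network with these hidden widths."""
    dims = [n_inputs, *hidden, n_outputs]
    return sum(d_in * d_out + d_out for d_in, d_out in zip(dims, dims[1:]))


def negative_mse(y_true: list[float], y_pred: list[float]) -> float:
    """Negative mean squared error: higher is better, 0.0 is a perfect fit."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    return -sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def size_penalized_fitness(y_true: list[float], y_pred: list[float],
                           n_params: int, penalty: float = 1e-4) -> float:
    """Hypothetical custom fitness: accuracy minus a small cost per parameter."""
    return negative_mse(y_true, y_pred) - penalty * n_params


for name, hidden in TEMPLATES.items():
    print(name, param_count(hidden, n_inputs=8, n_outputs=1))
# simple 161
# medium 833
# complex 3201
```

With a per-parameter penalty, a larger template only scores higher if its error reduction outweighs the cost of its extra parameters. That is one way to express the complexity trade-off that comparing templates is meant to expose.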

Overall, the NSAF MCP Server’s current capabilities center on automated neural architecture search and optimization. It effectively orchestrates a population of learning agents—initializing them, training/evaluating each, and applying genetic operations (mutation, crossover) to produce improved offspring—under the direction of either default settings or user-specified parameters. By exposing these functions through MCP, an AI assistant can trigger complex workflows (like “find me an optimal neural network for this data”) and let the server handle the heavy lifting.
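On the server side, exposing these functions through MCP comes down to three steps: read each request, route every `tools/call` to the matching handler, and write back a JSON-RPC response. The following is a minimal dispatcher sketch, assuming newline-delimited JSON-RPC 2.0 over stdio.

The `TOOLS` registry, the `tool` decorator and the placeholder `run_nsaf_evolution` handler are hypothetical. A full MCP server would also handle the `initialize` handshake and declare an input schema for each tool.

```python
import json
import sys
from typing import Callable, Optional

# Hypothetical registry mapping tool names to handlers that take the "arguments" dict.
TOOLS: dict[str, Callable[[dict], str]] = {}


def tool(name: str):
    """Decorator that registers a handler under an MCP tool name."""
    def register(fn: Callable[[dict], str]) -> Callable[[dict], str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("run_nsaf_evolution")
def run_nsaf_evolution(args: dict) -> str:
    """Run an NSAF evolution (placeholder: only echoes the parsed parameters)."""
    population_size = int(args.get("population_size", 20))
    generations = int(args.get("generations", 10))
    # A real handler would build the NSAF configuration and run the evolution here.
    return f"evolution requested: population_size={population_size}, generations={generations}"


def handle_message(line: str) -> Optional[str]:
    """Turn one JSON-RPC request line into a response line (None for notifications)."""
    request = json.loads(line)
    if "id" not in request:
        return None  # notifications never receive a response
    response = {"jsonrpc": "2.0", "id": request["id"]}
    method = request.get("method")
    if method == "tools/list":
        response["result"] = {
            "tools": [{"name": n, "description": (fn.__doc__ or "").strip()}
                      for n, fn in TOOLS.items()]
        }
    elif method == "tools/call":
        params = request.get("params", {})
        handler = TOOLS.get(params.get("name"))
        if handler is None:
            response["error"] = {"code": -32602, "message": f"unknown tool: {params.get('name')}"}
        else:
            text = handler(params.get("arguments", {}))
            response["result"] = {"content": [{"type": "text", "text": text}]}
    else:
        response["error"] = {"code": -32601, "message": f"method not found: {method}"}
    return json.dumps(response)


def serve(stdin=sys.stdin, stdout=sys.stdout) -> None:
    """Answer newline-delimited requests until the input stream closes."""
    for line in stdin:
        if not line.strip():
            continue
        reply = handle_message(line)
        if reply is not None:
            stdout.write(reply + "\n")
            stdout.flush()
```

A server built this way would call `serve()` at startup; the call blocks while reading stdin. Requests without an `id` are treated as notifications and get no reply, as JSON-RPC 2.0 requires.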